COURSEWORK 2 Natural Language Processing

CS-265/CSC325 Artificial Intelligence

Released: 22 March 2024

Due: Thursday 29 April 2024, 11am. This is a hard deadline and is set in relation to other submission deadlines.

Read and think through the whole coursework before starting to program. Review the NLP lectures and lab. See it as engineering a language mechanism, which you can experiment with and develop. The focus is on how features are used at different points in the grammar and lexicon to control well-formedness.

Follow the instructions fully and accurately. Marks are taken off for wrong or missing answers.

Address the following in the coursework

1. Write a DCG which parses input sentences and outputs a parse.

2. The DCG must parse sentences with the following features. The features a, b, c, d, and j are related to the lab. The others - e, g, h, k, and l - are additions that interact with other parts of the grammar and are explained further below.

a. Transitive and intransitive verbs

b. Common nouns

c. Determiners (e.g., ``a'', ``the'', and ``two'')

d. Subject/object singular/plural pronouns (e.g., ``he'', ``him'', ``I'', ``we'')

e. Pronouns with grammatical person (e.g., ``I'', ``you'', ``she'')

f. Singular/plural nouns (e.g., chair/chairs)

g. Adjectives and adjectival phrases

h. Prepositions and prepositional phrases

i. Subject-verb agreement for person, number, and animacy

j. Determiner-common noun number agreement

k. Animacy agreement between the subject and verb

l. Passive and active sentences

3. The DCG should separate grammar and lexicon. The lexicon must be included in your code and

include all the words and word forms in the lexicon below or those you should add to the

lexicon.

4. The sample outputs (below) should be carefully studied and emulated by the parser. It must be emphasised that the outputs are phrase structures of the input sentence; it is not sufficient just to recognise whether a sentence is well-formed according to the grammar. The categories, e.g., nbar, jp, adj, n, as well as the parsing structure, e.g., nbar(jp(adj(tall), jp(adj(young), n(men)))), should appear in the output. Output that misses categories and parsing structures will be marked down. While there may be different ways to write the grammar, the input and output must be precise and fixed, as the results will be automatically checked. If the output does not match the intended output, you can be marked down on that output.

Demonstration of Work

To demonstrate that your code works as intended, your code should correctly give the parse trees for the grammatical sentences in the list of test sentences (below) and fail for the ungrammatical sentences. In the list of test sentences below, an ungrammatical sentence is indicated with a * next to it, for example, "*the men sees the apples" is ungrammatical. We are not concerned with capitalisation or punctuation. For each sentence in the list of test sentences, query your parser; if the sentence is ungrammatical, the output should be false (the query fails); if the sentence is grammatical, the output should be the correct parse. An exception in your program means there is a problem and no marks are given.

Generally, if issues or problems arise, report these in a discussion section.

Your grammar should at least parse and provide the phrase structure for every sentence in the test sentences (below). As well, for evaluation, there will be unseen sentences that your grammar should parse and generate the phrase structure or fail to parse, given the instructions and the lexicon.

There are extensions that you are to make to the lexicon - read the instructions carefully all the way through to fully understand what needs to be done.

Submission

Submit your coursework as a single file containing your grammar. It should be a Prolog file with a name of the form:

YOUR-STUDENT-NUMBER_AI_NLP_2024.pl

The files will be submitted on TurnItIn (the link to be provided). The grammars will be automatically run and unit tested; that is, we will run your grammar against all the seen test sentences as well as unseen sentences that your grammar should parse or fail to parse given the instructions and the lexicon. This also means that if you have anything in your file which is not Prolog code (comments, discussion, examples, etc), then it should appear commented out.

Discussion

If you are inclined to engage in further discussion, raise issues, work on other examples, make observations, add further extensions, or even try other languages, you are welcome to share it in a document emailed to me (Adam Wyner). There is no additional mark per se. You will get remarks in return from the lecturer. Make sure you indicate your student number and name on your document.

Marking Scheme

The overall mark for the coursework is 15 marks. 60% of these marks are for correct output of parsed sentences (seen and unseen data) and 40% of these marks are for a well-formed grammar. Marks for incorrect output (wholly or partially) are deducted proportionately. A well-formed grammar uses DCGs (not difference lists), uses the full lexicon with additions (see below), uses the indicated grammatical categories and phrasal units, and reduces redundancy or complexity as much as is feasible (see below for further notes).

Test Sentences

* means the grammar should fail on these sentences. Some of the sentences may seem grammatical given other interpretations, which is discussed further below. Accept the grammaticality judgements given here, though they are open to discussion. The sentences are listed below; further below, there are additional sample inputs/outputs.

1. the woman sees the apples

2. a woman knows him

3. *two woman hires a man

4. two women hire a man

5. she knows her

6. *she know the man

7. *us see the apple

8. we see the apple

9. i know a short man

10. *he hires they

11. two apples fall

12. the apple falls

13. the apples fall

14. i sleep

15. you sleep

16. she sleeps

17. *he sleep

18. *them sleep

19. *a men sleep

20. *the tall woman sees the red

21. the young tall man knows the old short woman

22. *a man tall knows the short woman

23. a man on a chair sees a woman in a room

24. *a man on a chair sees a woman a room in

25. the tall young woman in a room on the chair in a room in the room sees the red apples under the chair

26. the man sleeps

27. *the room sleeps

28. *the apple sees the chair

29. *the rooms know the man

30. the apple falls

31. the man falls

32. the man breaks the chairs

33. the chairs are broken

34. the chairs are broken by the man

35. the chair is broken by the men

36. *the chair is broken by the apple

37. the room is hired by the man

38. *the chair broken by the man

39. a man is bitten by the dog

40. *a man is bitten the woman by the dog

41. *the chair falls by the man

42. *a man on a chair sees a woman by her

43. *a man on a chair sees a woman by she

44. *a man on a chair sees a woman by the woman

45. a man on a chair sees a woman on a chair

In addition to these 45 sentences, there are 15 sample inputs and parses below that you should use to develop and test your grammar.

These 60 sentences are the data set that you can use to develop and test your grammar. However, there will be more in the unseen testing data set, using the same lexicon with the same parameters of the grammar as described below. If your grammar parses and generates the phrase structure for the seen examples, it should, assuming you've designed the grammar well, also parse and generate the phrase structure for the unseen sentences.

Modeling the Sample Inputs and Parses

Below, in different sections, you will find sample model inputs and outputs. They highlight the categories and structures that your grammar should recognise and whose structure it should output. The output in the examples should be carefully studied and emulated by the grammar. The categories (below), e.g., nbar, jp, adj, n, byPass, and parsing structures (below), e.g., nbar(jp(adj(tall), jp(adj(young), n(men)))), should appear correctly in the output. Output that misses categories and parsing structures will be marked down. The examples also illustrate the predicate that can be called. It is essential that you use the form of this predicate and that your grammar produces these outputs in these forms. Obviously, the task of your grammar is to take the input and provide the output, so you know the intended target.

Comments and Tips

The focus of the grammar in the coursework is on how features are used to ``guide'' well-formedness of

parses.

Your grammar ought to provide only one parse for each input sentence. Check that it makes sense given the specification. If there is more than one parse, there is something to revise in your grammar.

In a long parse, you might see ... in your parse. This means that the parse is very long and Prolog is truncating it. If you want to see the full parse, let the lecturer know and a predicate can be circulated on Canvas.
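In the meantime, one way to see an untruncated parse is to print the term yourself. The sketch below assumes SWI-Prolog; show_parse/1 is a hypothetical helper name and is not the predicate the lecturer refers to. It belongs in a scratch file, not in the submitted grammar file.

```prolog
% Hypothetical helper (an assumption, not part of the coursework materials).
% write_term/2 with max_depth(0) imposes no depth limit, so the whole
% parse tree is written instead of being abbreviated with "...".
show_parse(Tree) :-
    write_term(Tree, [quoted(true), max_depth(0)]),
    nl.
```

A query would then take the form s(Tree, [the,man,sleeps], []), show_parse(Tree).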

The length of the parses should be proportional to the length of the input sentence. If you have very long parses for a relatively short sentence, then something is wrong with your grammar.

The grammar you are writing should recognise and output the parse of the relevant sentences (those in the seen data and others unseen relative to the lexicon, sample parses, and grammatical constructions) and fail on others. If you generate more sentences or provide further examples for parsing, you will quickly see that there are many odd or ungrammatical sentences that this grammar recognises. You will also see that some sentences can be grammatical if given a different interpretation of some of the parts of speech, e.g., the by-phrase only appears in the passive in this grammar, but in the meaning of ``alongside'' it would be grammatical. In this sense, a grammar is a theory which you can develop and evaluate incrementally with respect to the data. Writing a large scale grammar for a fragment of natural language must take into account a range of properties, e.g. ordering of prepositional phrases, alternative interpretations, semantic restrictions, semantic representations, pragmatics, etc., which we are not addressing in this coursework. Going ``hard core'' in the world of computational linguistic parsing and semantic representation means facing lots of hard, complex, and very interesting issues of natural language.

During development, you can also visualise the parse trees in SWISH (and probably not in your local SWI-Prolog installation). The predicate for this will be circulated on Canvas.

In general, your grammar file should only include the grammar and lexicon and no further Prolog directives (those lines that start with :-) or predicates that are not part of the grammar and lexicon, such as those you might use in SWISH for parse trees.

Notes on Grammatical Constructions

The notes here extend the topics found in Lab 6, so take them together.

Nouns (common and pronoun) carry features. This is explicit on pronoun forms (e.g. he/him, I/we), which have features such as number (singular/plural) and case (nominative/accusative). The grammatical role (subject/object) is related to the case on nouns, where the cases are nominative and accusative and align respectively with subject and object (broadly); that is, case forms of nouns indicate the role the noun has with respect to the verb.

Phrases and Structure

It is reasonable to have more than one rule for similar phrasal categories, where there is a significant reason to warrant them. For instance, we have transitive verbs (must have an object) and intransitive verbs (cannot have an object); you might have two different VP rules to represent this. Prepositional phrases are optional inside Noun Phrases; the byPass prepositional phrases are optional inside Verb Phrases. You might have two different NPs for the former, and two different VPs for the latter. We do not assume that binary branching is necessary, so a phrase might have more than two constituents (parts) within it.

s(Tree, [the,man,sleeps], []).

s(np(det(the),nbar(n(man))),vp(v(sleeps)))

s(Tree, [the,woman,sees,the,apples], []).

s(np(det(the),nbar(n(woman))),vp(v(sees),np(det(the),nbar(n(apples)))))

s(Tree, [the,woman,sees,the,apples,in,the,room], []).

s(np(det(the),nbar(n(woman))),vp(v(sees),np(det(the),nbar(n(apples)),pp(prep(in),np(det(the),nbar(n(room)))))))
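The first two sample parses above can be reproduced by a DCG in which every nonterminal carries the subtree it builds and every preterminal looks its word up in a separate lexicon. The following is a minimal sketch only: it uses a cut-down lexicon in the lex/N format given later in this document, and it deliberately ignores agreement, case, adjectives, and prepositional phrases. The rule layout is one possible design, not the required one.

```prolog
% Cut-down lexicon (a subset of the full lexicon below).
lex(the,det,_).
lex(man,n,singular,_,_,ani).
lex(woman,n,singular,_,_,ani).
lex(apples,n,plural,_,_,nani).
lex(sleeps,iv,singular,3,ani).
lex(sees,tv,singular,3,ani).

% Grammar: the extra argument of each nonterminal is the parse tree.
s(s(NP,VP))         --> np(NP), vp(VP).
np(np(Det,nbar(N))) --> det(Det), n(N).
vp(vp(V))           --> iv(V).          % intransitive: no object
vp(vp(V,NP))        --> tv(V), np(NP).  % transitive: object required

% Preterminals: consume one word and check its category in the lexicon.
det(det(W)) --> [W], {lex(W,det,_)}.
n(n(W))     --> [W], {lex(W,n,_,_,_,_)}.
iv(v(W))    --> [W], {lex(W,iv,_,_,_)}.
tv(v(W))    --> [W], {lex(W,tv,_,_,_)}.
```

Because s//1 is translated to s/3, the query s(Tree, [the,man,sleeps], []) binds Tree to the phrase structure when the whole word list is consumed.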

Pronouns

Pronouns (e.g. he/him, I/we) have features such as number (singular/plural) and grammatical role (subject/object). The grammatical role is related to the case on nouns, that is, forms of nouns that indicate what role the noun has with respect to the verb. In English, there are three cases - Nominative, Accusative, and Genitive. The last we ignore. Pronouns show this most clearly in English - ``she'' is a pronoun in the nominative form, while ``her'' is a pronoun in the accusative form. When a pronoun is in the subject position, it must appear in the nominative form; when a pronoun is in the object position, it must appear in the accusative form. In addition, pronouns have features such as grammatical person, e.g. first person ``i'', second person ``you'', third person ``she''. Grammatical person indicates a closer or more distant relationship between the speaker of the sentence and other persons: ``I see the apple'' represents the most personal statement (first person); ``You see the apple'' is between the speaker and a person who is immediately present; and ``He sees the apple'' is the most distant, as it can relate to a person who is not immediately present or somehow less ``relevant''.

The lexicon shows the features of pronouns: number, case, grammatical person, and animacy (whether it is a cognitive entity).

s(Tree,[she,knows,her],[]).

Tree = s(np(pro(she)), vp(v(knows), np(pro(her)))).

s(Tree,[her,knows,she],[]).

false.
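The case contrast in the two queries above can be enforced by passing the required case down from the position in which the noun phrase occurs. This is a minimal sketch under stated assumptions: np//2 with case as its second argument is a hypothetical design choice, only three lexicon entries are included, and number and person agreement are left out.

```prolog
% Cut-down lexicon (a subset of the full lexicon below).
lex(she,pro,singular,3,nom,ani).
lex(her,pro,singular,3,acc,ani).
lex(knows,tv,singular,3,ani).

% The subject position demands nominative case, the object position accusative.
s(s(NP,VP))  --> np(NP,nom), vp(VP).
vp(vp(V,NP)) --> tv(V), np(NP,acc).

% A pronoun is accepted only if its lexical case matches the demanded case.
np(np(pro(W)),Case) --> [W], {lex(W,pro,_,_,Case,_)}.
tv(v(W))            --> [W], {lex(W,tv,_,_,_)}.
```

With these rules, [she,knows,her] parses and [her,knows,she] fails, because ``her'' has no nominative entry and ``she'' has no accusative one.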

Agreement

Pronouns and noun phrases show several features. A noun phrase (and pronouns in particular) must agree with the verb in several ways, that is, the number, case, person, and animacy features of the noun phrase must be compatible with those features of the verb - this is how the structure of the mechanism 'locks' together. While sentences have subject and object positions, these are reflected in the order of arguments rather than some additional feature.
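Agreement of this kind is usually expressed in a DCG by sharing feature variables between the subject NP and the VP, so that unification does the checking. The sketch below is illustrative only and covers just intransitive verbs with determiner-noun subjects. The argument order (tree, number, person, animacy) is an assumption, as is the decision to treat common-noun phrases as third person, since the lexicon leaves their person unspecified.

```prolog
% Cut-down lexicon (a subset of the full lexicon below).
lex(the,det,_).
lex(a,det,singular).
lex(two,det,plural).
lex(man,n,singular,_,_,ani).
lex(men,n,plural,_,_,ani).
lex(room,n,singular,_,_,nani).
lex(sleeps,iv,singular,3,ani).
lex(sleep,iv,plural,_,ani).

% Num, Per and Ani are shared between subject and verb phrase.
s(s(NP,VP)) --> np(NP,Num,Per,Ani), vp(VP,Num,Per,Ani).

% The determiner and the noun must carry the same number.
% Assumption: an NP headed by a common noun counts as third person.
np(np(det(D),nbar(n(N))),Num,3,Ani) -->
    [D], {lex(D,det,Num)},
    [N], {lex(N,n,Num,_,_,Ani)}.

% The verb form must match the subject's number, person and animacy.
vp(vp(v(V)),Num,Per,Ani) --> [V], {lex(V,iv,Num,Per,Ani)}.
```

Here [the,men,sleep] parses, while [a,men,sleep] fails on determiner-noun number and [the,room,sleeps] fails on animacy.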

Structures for NPs with Adjectives and Prepositional Phrases

For our purposes, an adjective such as ``tall'' describes a property of a common noun such as ``man''. The adjective precedes the noun. For example: ``the tall man sees the woman'' is grammatical; ``the man tall sees the woman'' is ungrammatical. You can have any number of adjectives, for example: ``the tall tall old man sees the woman''; ``the tall tall old old man sees the woman'', even if a bit odd, we'll accept as grammatical.

For our purposes, a prepositional phrase modifies a noun without restriction, and it is made up of a preposition and a noun phrase. The preposition provides information about the relative locations of the nouns, i.e., the noun that is modified and the noun within the prepositional phrase. The prepositional phrase follows the noun that it modifies: ``the man in the room sees a woman on a chair''. We see them as a relation between ``man'' and ``room''. You can have any number of prepositional phrases, for example: ``the woman in a room on the chair in a room in the room sees the man''. We could have a prepositional phrase modifying a verb as in ``the woman sleeps in the room'', but do not for this coursework.

As an adjective or prepositional phrase modifies a noun phrase, it can appear with the noun phrase in either the subject or the object position.

An adjective or prepositional phrase is optional in the sense that not having them in a sentence results in a sentence that is still grammatical. However, there is a loss of meaning.

As a hint about the grammar of adjectives and prepositional phrases in noun phrases, see the phrase trees for the sample sentences below. They indicate the grammatical structure of the categories and phrase structure for adjectives and prepositional phrases in noun phrases; though somewhat complicated, they show the variety of structures. While the grammatical structure of jp and nbar are unfamiliar, we can take them as given. Use these categories and phrase structures for your grammar. Given such input (and similar), your parser should produce the same sort of output:

s(Tree, [the, woman, on, two, chairs, in, a, room, sees, two, tall, young, men], []).

Tree = s(np(det(the), nbar(n(woman)), pp(prep(on), np(det(two), nbar(n(chairs)), pp(prep(in), np(det(a), nbar(n(room))))))), vp(v(sees), np(det(two), nbar(jp(adj(tall), jp(adj(young), n(men)))))))

s(Tree, [the, woman, in, a, room, sees, two, young, men], []).

s(np(det(the),nbar(n(woman)),pp(prep(in),np(det(a),nbar(n(room))))),vp(v(sees),np(det(two),nbar(jp(adj(young),n(men))))))
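The nbar, jp and pp shapes in these sample parses suggest right-recursive rules: a jp is an adjective followed by either a noun or another jp, and an NP may end in one pp whose own NP may in turn end in a pp. The following sketch covers noun phrases only and is one possible reading of the sample output, not the required grammar. It uses a cut-down lexicon and omits number agreement, so it would wrongly accept ``a men''.

```prolog
% Cut-down lexicon (a subset of the full lexicon below).
lex(the,det,_).
lex(a,det,singular).
lex(two,det,plural).
lex(men,n,plural,_,_,ani).
lex(room,n,singular,_,_,nani).
lex(tall,adj).
lex(young,adj).
lex(in,prep).

% An NP is a determiner plus nbar, optionally followed by one pp.
np(np(Det,Nbar))    --> det(Det), nbar(Nbar).
np(np(Det,Nbar,PP)) --> det(Det), nbar(Nbar), pp(PP).

% nbar wraps either a bare noun or an adjectival phrase.
nbar(nbar(N))  --> n(N).
nbar(nbar(JP)) --> jp(JP).

% jp is right-recursive: the innermost jp contains the noun.
jp(jp(Adj,N))  --> adj(Adj), n(N).
jp(jp(Adj,JP)) --> adj(Adj), jp(JP).

% A pp is a preposition plus an NP, which may itself contain a pp.
pp(pp(Prep,NP)) --> prep(Prep), np(NP).

det(det(W))   --> [W], {lex(W,det,_)}.
n(n(W))       --> [W], {lex(W,n,_,_,_,_)}.
adj(adj(W))   --> [W], {lex(W,adj)}.
prep(prep(W)) --> [W], {lex(W,prep)}.
```

Since each NP takes at most one pp directly, a chain of prepositional phrases nests to the right and yields a single parse.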

All this said, there is a difference between ``ordinary'' prepositional phrases and a particular prepositional phrase that appears in the passive. For our purposes, we will differentiate them. See below.

The Passive

Passive and active sentences are closely related:

the dog bites the woman. (active)

s(Tree,[the,dog, bites, the, woman],[]).

s(np(det(the),nbar(n(dog))),vp(v(bites),np(det(the),nbar(n(woman)))))

the woman is bitten by the dog. (passive)

s(Tree,[the,woman, is, bitten, by, the, dog],[]).

s(np(det(the),nbar(n(woman))),vp(aux(is),v(bitten),byPrepP(byPrep(by),np(det(the),nbar(n(dog))))))

The passive is part of a much more widespread and diverse family of grammatical constructions called diathesis alternations. In diathesis alternations, the arguments of the verb appear in alternative positions yet with largely the same meaning. The passive and active sentences above mean the same thing, but it is given in a different way and with some different rhetorical uses. Other examples of diathesis:

Direct-Indirect Object: A woman gives a book to a man; A woman gives a man a book

Causative: The woman broke the chair; the chair was broken

We only consider the passive. While the past tense would be nicest, we have kept to the present tense (it doesn't really matter). There are several characteristics of the Passive construction in English:

• The positions and case of the noun phrases change: what is the object (accusative) NP in the active sentence is the subject (nominative) in the passive; what is the subject (nominative and 'animate doer') NP in the active is in a particular prepositional phrase which represents the 'animate doer' of the action.

she hires him.

s(Tree, [she,hires,him], []).

s(np(pro(she)),vp(v(hires),np(pro(him))))

he is hired by her.

s(Tree, [he,is,hired,by,her], []).

s(np(pro(he)),vp(aux(is),v(hired),byPrepP(byPrep(by),np(pro(her)))))

*her hires him.

s(Tree, [her,hires,him], []).

false.

*he is hired by she.

s(Tree, [he,is,hired,by,she], []).

false

• An ``auxiliary'' or ``helper'' verb (a form of ``to be'' in this lexicon) is introduced in the passive. Without the auxiliary, it might be read as a nominal, which is another matter.

*the woman bitten by the dog.

s(Tree,[the,woman, bitten, by, the, dog],[]).

false

• While the subject of the verb must be animate in the active, it need not be in the passive.

s(Tree, [the,woman,breaks,the,chair], []).

s(np(det(the),nbar(n(woman))),vp(v(breaks),np(det(the),nbar(n(chair)))))

s(Tree, [the,apple,breaks,the,chair], []).

false

s(Tree, [the,chair,is,broken,by,the,woman], []).

s(np(det(the),nbar(n(chair))),vp(aux(is),v(broken),byPrepP(byPrep(by),np(det(the),nbar(n(woman))))))

• The verb in the active appears in a ``past participle'' form in the passive.

The dog bites the woman.

The woman is bitten by the dog.

s(Tree, [the,woman,is,bites,by,the,dog], []).

false

• The particular prepositional phrase is optional without loss of meaning. This is in contrast with dropping ``ordinary'' prepositional phrases.

The woman is bitten by the dog.

s(Tree,[the,woman,is,bitten],[]).

s(np(det(the),nbar(n(woman))),vp(aux(is),v(bitten)))

• Note the phrase structure. The auxiliary, the passive participle, and the particular prepositional phrase all appear together at the same ``level'' in the phrase structure in the VP. This is in contrast to ordinary prepositional phrases which modify noun phrases.

The woman in the room is bitten by the dog in the room.

s(Tree, [the,woman,in,the,room,is,bitten,by,the,dog,in,the,room], []).

s(np(det(the),nbar(n(woman)),pp(prep(in),np(det(the),nbar(n(room))))),vp(aux(is),v(bitten),byPrepP(byPrep(by),np(det(the),nbar(n(dog)),pp(prep(in),np(det(the),nbar(n(room))))))))
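The characteristics listed above can be sketched as a pair of passive VP rules in which the auxiliary, the past participle, and the optional by-phrase are sisters under vp, and the by-phrase demands an accusative, animate NP. This is a minimal illustration under stated assumptions: the argument order of np//5 (tree, number, person, case, animacy) and the nonterminal names aux//3, pastpart//3 and bypp//1 are hypothetical, the lexicon is cut down, and active sentences, adjectives and ordinary prepositional phrases are omitted.

```prolog
% Cut-down lexicon (a subset of the full lexicon below).
lex(by,byPrep).
lex(the,det,_).
lex(is,aux,singular,3).
lex(broken,pastPart,singular,3,_).
lex(chair,n,singular,_,_,nani).
lex(apple,n,singular,_,_,nani).
lex(woman,n,singular,_,_,ani).
lex(she,pro,singular,3,nom,ani).
lex(her,pro,singular,3,acc,ani).

% The passive subject is nominative; its animacy is unconstrained.
s(s(NP,VP)) --> np(NP,Num,Per,nom,_Ani), vp(VP,Num,Per).

% Passive VP: aux, participle and optional by-phrase at the same level.
vp(vp(aux(A),v(V)),Num,Per) -->
    aux(A,Num,Per), pastpart(V,Num,Per).
vp(vp(aux(A),v(V),ByPP),Num,Per) -->
    aux(A,Num,Per), pastpart(V,Num,Per), bypp(ByPP).

% The by-phrase holds the 'animate doer': accusative and animate.
bypp(byPrepP(byPrep(W),NP)) --> [W], {lex(W,byPrep)}, np(NP,_,_,acc,ani).

% Common-noun NPs are case-neutral and assumed third person.
np(np(det(D),nbar(n(N))),Num,3,_Case,Ani) -->
    [D], {lex(D,det,Num)}, [N], {lex(N,n,Num,_,_,Ani)}.
np(np(pro(W)),Num,Per,Case,Ani) --> [W], {lex(W,pro,Num,Per,Case,Ani)}.

aux(A,Num,Per)      --> [A], {lex(A,aux,Num,Per)}.
pastpart(V,Num,Per) --> [V], {lex(V,pastPart,Num,Per,_)}.
```

With this fragment, [the,chair,is,broken,by,the,woman] and [the,chair,is,broken] parse, while a by-phrase containing ``the apple'' (inanimate) or ``she'' (nominative) fails.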

The Lexicon

The lexicon should include all the following words that appear, where the components of each lexical

entry are as given. This is not the form that your code requires, but is a helpful hint.

The grammar must treat the features in the lexicon.

%%%%%%%%%%%% Lexicon %%%%%%%%%%%%%%

% The lexicon should include all the following words that appear, where the components of each lexical

% entry are as given. For clarity, the lexicon is given in the form that your code would require.

% The grammar must treat the features in the lexicon.

% Note that there are some lexical forms that your grammar would require, but are missing in the listing below.

%%% Pronouns %%%

% For pronouns, the information appears in the following order: word, grammatical category (pronoun),

% number (singular/plural), grammatical person (1st, 2nd, or 3rd), case (nom for subject, acc for

% object), and animacy (ani or nani)

lex(i,pro,singular,1,nom,ani).

lex(you,pro,singular,2,nom,ani).

lex(he,pro,singular,3,nom,ani).

lex(she,pro,singular,3,nom,ani).

lex(it,pro,singular,3,nom,ani).

lex(we,pro,plural,1,nom,ani).

lex(you,pro,plural,2,nom,ani).

lex(they,pro,plural,3,nom,ani).

lex(me,pro,singular,1,acc,ani).

lex(you,pro,singular,2,acc,ani).

lex(him,pro,singular,3,acc,ani).

lex(her,pro,singular,3,acc,ani).

lex(it,pro,singular,3,acc,ani).

lex(us,pro,plural,1,acc,ani).

lex(you,pro,plural,2,acc,ani).

lex(them,pro,plural,3,acc,ani).

%%% Common Nouns %%%

% For common nouns, the information appears in the following order: word, grammatical category (noun),

% number, person and case (both left unspecified), and animacy (ani or nani)

lex(man,n,singular,_,_,ani).

lex(woman,n,singular,_,_,ani).

lex(dog,n,singular,_,_,ani).

lex(apple,n,singular,_,_,nani).

lex(chair,n,singular,_,_,nani).

lex(room,n,singular,_,_,nani).

% Thematic rules we won't use.

%lex(X,n,singular,_,_,agent) :- lex(X,n,singular,_,_,ani).

%lex(X,n,singular,_,_,experiencer) :- lex(X,n,singular,_,_,ani).

lex(men,n,plural,_,_,ani).

lex(women,n,plural,_,_,ani).

lex(dogs,n,plural,_,_,ani).

lex(apples,n,plural,_,_,nani).

lex(chairs,n,plural,_,_,nani).

lex(rooms,n,plural,_,_,nani).

%%% Verbs %%%

% For verbs, the information appears in the following order: word, grammatical category (verb), number

% (singular/plural), grammatical person (1st, 2nd, 3rd), and the animacy required of the subject

%%% Transitive Verbs %%%

% Note that we do not have in this example Lexicon past participles for every transitive verb:

% know, see, hire

lex(know,tv,singular,1,ani).

lex(know,tv,singular,2,ani).

lex(knows,tv,singular,3,ani).

lex(know,tv,plural,_,ani).

lex(see,tv,singular,1,ani).

lex(see,tv,singular,2,ani).

lex(sees,tv,singular,3,ani).

lex(see,tv,plural,_,ani).

lex(hire,tv,singular,1,ani).

lex(hire,tv,singular,2,ani).

lex(hires,tv,singular,3,ani).

lex(hire,tv,plural,_,ani).

lex(break,tv,singular,1,ani).

lex(break,tv,singular,2,ani).

lex(breaks,tv,singular,3,ani).

lex(break,tv,plural,_,ani).

lex(bite,tv,singular,1,ani).

lex(bite,tv,singular,2,ani).

lex(bites,tv,singular,3,ani).

lex(bite,tv,plural,_,ani).

%%% Past Participle %%%

% These verb forms are used in the passive.

% Only the tv verbs can be passivized. We don't provide them all in this listing,

% but as part of the coursework, you should add the pastPart of other tv verbs to the lexicon

% so as to recognise some of the sentences above and others.

% Note that verbs in the passive do not require animate subjects, though this is required of

% the transitive forms.

%

% You will need to add to the lexicon past participle forms for all those verbs that are transitive above.

lex(broken,pastPart,singular,1,_).

lex(broken,pastPart,singular,2,_).

lex(broken,pastPart,singular,3,_).

lex(broken,pastPart,plural,_,_).

lex(bitten,pastPart,singular,1,_).

lex(bitten,pastPart,singular,2,_).

lex(bitten,pastPart,singular,3,_).

lex(bitten,pastPart,plural,_,_).

%%% Intransitive verbs %%%

% These cannot go into the passive.

lex(fall,iv,singular,1,_).

lex(fall,iv,singular,2,_).

lex(falls,iv,singular,3,_).

lex(fall,iv,plural,_,_).

lex(sleep,iv,singular,1,ani).

lex(sleep,iv,singular,2,ani).

lex(sleeps,iv,singular,3,ani).

lex(sleep,iv,plural,_,ani).

%%% Auxiliary verbs (aux) for the passive %%%

% In this version of the lexicon, animacy of auxiliary verbs is not necessary,

% though if the grammar is done in a different way, it might be.

lex(am,aux,singular,1).

lex(are,aux,singular,2).

lex(is,aux,singular,3).

lex(are,aux,plural,_).

%%% Determiners %%%

% For determiners, the information appears in the following order: word, grammatical category

% (determiner), number

lex(the,det,_).

lex(a,det,singular).

lex(two,det,plural).

%%% Prepositions %%%

% For prepositions, the information appears in the following order: word, grammatical category (preposition)

lex(on,prep).

lex(in,prep).

lex(under,prep).

% We have a designated category for the preposition for the passive by-phrase.

% When it is connected to the grammar and used to recognise sentences, it will require

% an animate np. Consult the examples and discussion above.

lex(by,byPrep).

%%% Adjectives %%%

% For adjectives, the information appears in the following order: word, grammatical category (adjective)

lex(old,adj).

lex(young,adj).

lex(red,adj).

lex(short,adj).

lex(tall,adj).

请加QQ:99515681 邮箱:99515681@qq.com WX:codinghelp

- 刷新你的营销思维WhatsApp工具教你如何把握商业趋势站在风口浪尖

- 2024广东水展即将开幕 | 聚焦净水行业热点 抢占行业新机遇!

- instagram出海营销引流软件,ins强大引流群发私信助手

- 我事业成功的最佳助力Telegram群发软件,品牌安全!Telegram群发云控保障您的推广进行

- Telegram群发引流大师,高效采集用户,助你轻松拉群!

- 四川点亮饰界建筑装饰材料有限公司全屋整装给您稳重又灵动的居所

- 丽水市中心医院完成全球首例单孔机器人近端胃癌根治术(食道残胃单肌瓣吻合)

- 西部数据通过ASPICE CL3评估认证,满足汽车行业不断变化的需求

- 首个管家服务白皮书发布 万科物业重新定义物业服务

- WhatsApp营销软件/ws群发/ws协议号/ws筛选/ws接粉

- Instagram营销群发软件,Ins一键群发工具,助你实现快速推广!

- 贵州桃花源新型材料有限公司负氧离子全屋整装增添了空间的独特韵味

- Blackjack编程代做、代写c/c++程序语言

- 马斯克:SpaceX 今年将进行 144 次发射,希望八年后登陆火星

- Ins拉群软件,Instagram引流助手,让你的营销无往不利!

- 市场巨星 博主开箱 WhatsApp拉群营销工具是我业务成功的不二之选

- instagram营销推流上粉营销工具,ins打粉爆粉群发营销助手

- 全线高端 智能焕新,东软医疗强势亮相2024CMEF

- 代写Hypertension and Low Income in Toronto

- 你发现了这款WhatsApp拉群营销工具吗 快来分享一下你的使用心得吧

- 世贸通美国移民:I-956F批准后又一批EB-5投资人I-526E获批!

- WhatsApp群发协议工具/ws协议号/ws频道号/ws业务咨询大轩

- Ins引流营销助手,Instagram打粉工具,助你轻松拓展市场!

- Morgan Stanley包容性风险投资实验室为规模最大、全球化程度最高的一批初创企业举办演示日活动

- 行业领导者的选择工具 WhatsApp海外营销高手教你如何揭示市场趋势稳占商机

- 木心集团与复旦大学“奖学金”颁奖暨“实习基地”揭牌仪式,圆满举行!

- 忆联带你读懂闪存原理与颗粒类型

- 跨境电商WhatsApp代拉群助推品牌,信息传递如风般迅捷!商家营销新时代的必备工具

- Instagram群发代发工具,Ins一键群发工具,助你轻松营销!

- instagram群发采集神器,高效引流爆粉,助你成为社交达人!

推荐

-

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

-

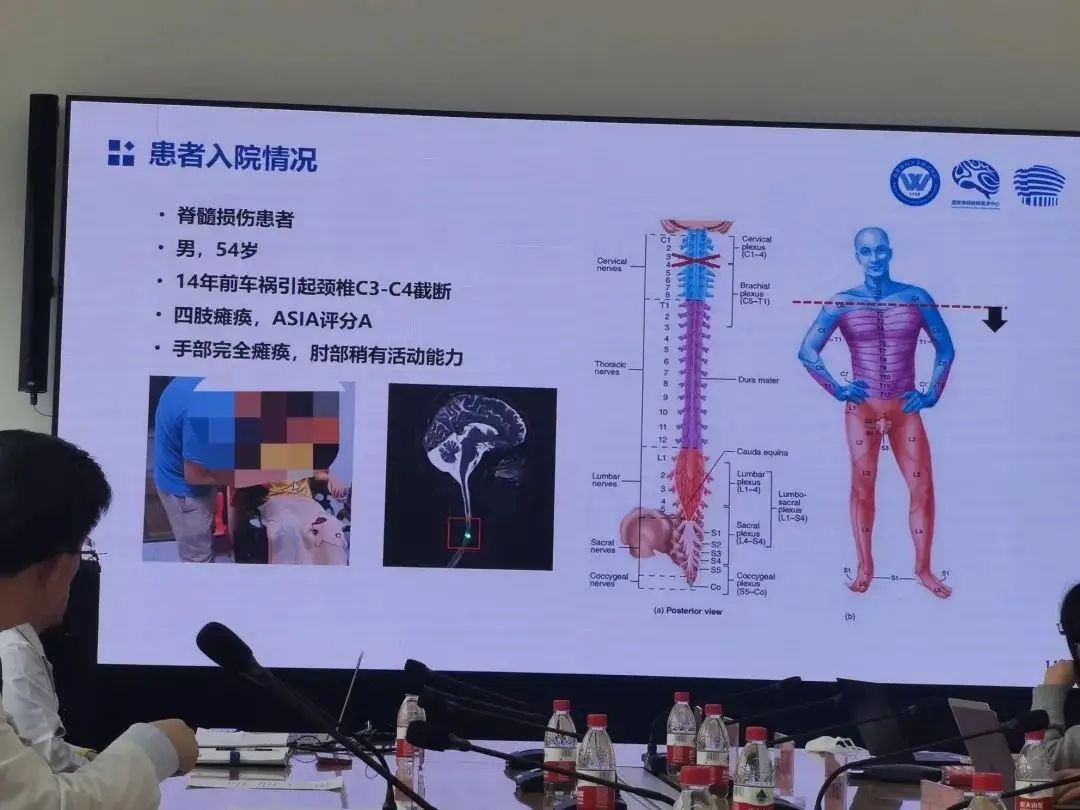

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

-

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

-

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

-

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技

-

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

-

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

-

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

-

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

-

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技