
Project 3: Real-time 2-D Object Recognition

Due Feb 24 by 11:59pm

Points 30

This project is about 2D object recognition. The goal is to have the computer identify a specified set of objects placed on a white surface in a translation, scale, and rotation invariant manner from a camera looking straight down. The computer should be able to recognize single objects placed in the image and show the position and category of the object in an output image. If provided a video sequence, it should be able to do this in real time.

Setup

For development, you can use this development image set (https://northeastern.instructure.com/courses/175350/files/24760264?wrap=1) (https://northeastern.instructure.com/courses/175350/files/24760264/download?download_frd=1). Once you have your system working, you'll need to create your own workspace for real-time recognition. If you have a webcam, the easiest thing to do is set it up on some kind of stand facing down onto a flat surface covered with white paper. If you have only a built-in laptop camera, find a section of white wall in front of which you can hold the objects to be identified. Alternatively, you may be able to stream your phone's camera video to your laptop. A setup with a downward-facing camera over a white surface is the easiest to work with. If none of those work for you, capture some videos or still images with a phone and set up your program to process single images or video sequences.

Your system needs to be able to differentiate at least 5 objects of your choice. Pick objects that are differentiable by their 2D shape and are mostly a uniform dark color, similar to the dev image set. The goal of this project is a real-time system, but if that is not feasible given your setup, you can have your system take in a directory of images or a pre-recorded video sequence and process them instead. Make sure it is clear in your report which type of system you built.

If you are working on your own, you must write the code from scratch for at least one of the first four tasks: thresholding, morphological filtering, connected components analysis, or moment calculations. Otherwise, you may use OpenCV functions. If you are working in pairs, you must write the code for two of the first four tasks from scratch.

Tasks

1. Threshold the input video

Using the video framework from the first project (if you wish), start building your object recognition (OR) system by implementing a thresholding algorithm of some type that separates an object from the background. Give your system the ability to display the thresholded video (remember, you can create multiple output windows using OpenCV). Test it on the complete set of objects to be recognized to make sure this step is working well. In general, the objects you use should be darker than the background, and the whole area should be well-lit.

You may want to pre-process the image before thresholding. For example, blurring the image a little can make the regions more uniform. You could also consider making strongly colored pixels (pixels with a high saturation value) darker, moving them further away from the white background (which is unsaturated).
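The saturation idea can be sketched as a small library-free C++ function on a plain interleaved BGR buffer. The function name, the `strength` knob, and the linear darkening rule are assumptions made for illustration; an OpenCV pipeline would more likely work on a cv::Mat using cv::cvtColor (and cv::GaussianBlur for the blurring step).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical pre-processing helper (not an OpenCV API).
// Input: interleaved 8-bit BGR pixels (OpenCV's default channel order).
// Output: one gray value per pixel, darkened in proportion to its HSV
// saturation S = (max - min) / max. Unsaturated white paper (S near 0) keeps
// its brightness; strongly colored pixels move toward black.
std::vector<std::uint8_t> grayDarkenSaturated(const std::vector<std::uint8_t>& bgr,
                                              float strength) {
    assert(bgr.size() % 3 == 0);
    assert(strength >= 0.0f && strength <= 1.0f);  // assumed tuning knob
    std::vector<std::uint8_t> out(bgr.size() / 3);
    for (std::size_t i = 0; i < out.size(); ++i) {
        int b = bgr[3 * i], g = bgr[3 * i + 1], r = bgr[3 * i + 2];
        int mx = std::max({b, g, r});
        int mn = std::min({b, g, r});
        float sat = (mx == 0) ? 0.0f : float(mx - mn) / float(mx);
        // Gray value from the usual BT.601 luma weights.
        float gray = 0.114f * b + 0.587f * g + 0.299f * r;
        float darkened = gray * (1.0f - strength * sat);
        out[i] = static_cast<std::uint8_t>(std::clamp(darkened + 0.5f, 0.0f, 255.0f));
    }
    return out;
}
```

For example, a strength of 0.5 halves the brightness of a fully saturated pixel while leaving white paper unchanged.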

It may also be helpful to set your threshold dynamically by analyzing the pixel values. Running a k-means algorithm with K=2 (also called the ISODATA algorithm) on a random sample of pixel values (e.g., 1/16 of the pixels in the image) gives you the means of the two dominant colors in the image. The value midway between those means may be a good threshold to use.

Required images: Include 2-3 examples of the thresholded objects in your report

2. Clean up the binary image

Clean up your thresholded image using some type of morphological filtering. Look at your images and decide whether they show noise, holes, or other issues. Pick a morphological filtering strategy (for example, growing/shrinking) to try to solve the issue(s). Explain your reasoning in your report.
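One common strategy, opening to remove small noise specks and closing to fill small holes, is sketched below with a 3x3 square structuring element. This is a from-scratch illustration with hypothetical names; the OpenCV equivalents are cv::erode, cv::dilate, and cv::morphologyEx. Which operations you need, and in what order, depends on what your own images show.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Binary mask in row-major order: 255 = foreground, 0 = background.
struct Mask {
    int w, h;
    std::vector<std::uint8_t> px;
    // Pixels outside the image are treated as background.
    bool on(int x, int y) const {
        return x >= 0 && y >= 0 && x < w && y < h && px[y * w + x] != 0;
    }
};

// 3x3 square structuring element (8-connected neighborhood).
// grow = true: dilation (set if ANY neighbor is set).
// grow = false: erosion (set only if ALL neighbors are set).
Mask morph3x3(const Mask& in, bool grow) {
    assert(in.px.size() == static_cast<std::size_t>(in.w) * in.h);
    Mask out{in.w, in.h, std::vector<std::uint8_t>(in.px.size(), 0)};
    for (int y = 0; y < in.h; ++y)
        for (int x = 0; x < in.w; ++x) {
            bool any = false, all = true;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    bool v = in.on(x + dx, y + dy);
                    any = any || v;
                    all = all && v;
                }
            out.px[y * in.w + x] = (grow ? any : all) ? 255 : 0;
        }
    return out;
}

Mask dilate(const Mask& m) { return morph3x3(m, true); }
Mask erode(const Mask& m) { return morph3x3(m, false); }
// Opening (erode, then dilate) removes foreground specks too small to
// contain the 3x3 element.
Mask opening(const Mask& m) { return dilate(erode(m)); }
// Closing (dilate, then erode) fills holes too small to contain the element.
Mask closing(const Mask& m) { return erode(dilate(m)); }
```

For example, `closing(opening(mask))` first removes isolated noise pixels and then fills pinholes inside the object; applying an operation repeatedly enlarges its effective neighborhood.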

Required images: Include 2-3 examples of cleaned-up images in your report. Use the same images as displayed for task 1.

3. Segment the image into regions

The next step is to run connected components analysis on the thresholded and cleaned image to get regions. Give your system the ability to display the regions it finds. A good extension is to enable recognition of multiple objects simultaneously (but this is not required). Your system should ignore any regions that are too small, and it can limit the recognition to the largest N regions.

A segmentation algorithm will return a region map. If you try to display the region map directly, it won't tell you much, because the region IDs are small, similar integers that render as nearly indistinguishable dark values. A better method of displaying the region map is to ignore regions that are too small and to pick a color for each remaining region from a color palette (e.g., a list of 256 random colors). Once you have removed regions that are too small, it can be helpful to renumber the remaining regions in sequential order. If you want to avoid color flickering in your region map display, you will have to remember the centroid and color of each region in the prior image and match centroids (and reuse their colors) in the next image.
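The filtering, renumbering, and palette steps might look like the sketch below, which works on a plain integer label map such as the one cv::connectedComponents fills in (label 0 is background). The function names, the descending-area ordering, and the palette seed are assumptions; matching centroids across frames to avoid flicker is not shown.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Given a label map (0 = background, 1..numLabels-1 = regions), drop regions
// smaller than minArea, keep at most the maxRegions largest, and renumber the
// survivors 1..M in order of decreasing area. Returns the new label map.
std::vector<int> filterAndRenumber(const std::vector<int>& labels, int numLabels,
                                   int minArea, int maxRegions) {
    std::vector<int> area(numLabels, 0);
    for (int l : labels) {
        assert(l >= 0 && l < numLabels);
        ++area[l];
    }

    std::vector<int> ids;  // candidate region IDs, background excluded
    for (int id = 1; id < numLabels; ++id)
        if (area[id] >= minArea) ids.push_back(id);
    std::stable_sort(ids.begin(), ids.end(),
                     [&](int a, int b) { return area[a] > area[b]; });
    if (static_cast<int>(ids.size()) > maxRegions) ids.resize(maxRegions);

    std::vector<int> remap(numLabels, 0);  // old ID -> new ID (0 = dropped)
    for (std::size_t i = 0; i < ids.size(); ++i) remap[ids[i]] = static_cast<int>(i) + 1;

    std::vector<int> out(labels.size());
    for (std::size_t i = 0; i < labels.size(); ++i) out[i] = remap[labels[i]];
    return out;
}

// A fixed-seed palette of 256 random BGR colors, so region k is drawn in the
// same color every frame as long as its number does not change.
std::vector<std::array<std::uint8_t, 3>> makePalette(unsigned seed = 12345) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> channel(50, 255);  // avoid near-black colors
    std::vector<std::array<std::uint8_t, 3>> palette(256);
    for (auto& c : palette)
        for (auto& v : c) v = static_cast<std::uint8_t>(channel(rng));
    palette[0] = {0, 0, 0};  // background stays black
    return palette;
}
```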

OpenCV has a connected components function. If you don't write your own, use the version that also returns the region statistics. If you choose to write your own, the two-pass connected components algorithm and region growing are both good algorithms to know.
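If you write connected components yourself, the two-pass algorithm can be sketched with a union-find structure that records label equivalences. This version uses 4-connectivity and hypothetical names; switching to 8-connectivity means also checking the two upper diagonal neighbors in the first pass.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Union-find over provisional labels (path halving, smaller root wins).
struct DisjointSet {
    std::vector<int> parent;
    int make() {
        parent.push_back(static_cast<int>(parent.size()));
        return parent.back();
    }
    int find(int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];
            x = parent[x];
        }
        return x;
    }
    void unite(int a, int b) {
        a = find(a);
        b = find(b);
        if (a != b) parent[std::max(a, b)] = std::min(a, b);
    }
};

// Two-pass, 4-connected labeling of a binary mask (nonzero = foreground).
// Returns a row-major label map: 0 = background, regions 1..numRegions.
std::vector<int> twoPassLabel(const std::vector<std::uint8_t>& mask, int w, int h,
                              int& numRegions) {
    assert(mask.size() == static_cast<std::size_t>(w) * h);
    std::vector<int> lab(mask.size(), 0);
    DisjointSet ds;
    ds.make();  // provisional label 0 is reserved for background

    // Pass 1: provisional labels from the up and left neighbors,
    // recording an equivalence whenever both are labeled.
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            if (!mask[y * w + x]) continue;
            int up = y > 0 ? lab[(y - 1) * w + x] : 0;
            int left = x > 0 ? lab[y * w + x - 1] : 0;
            int& here = lab[y * w + x];
            if (!up && !left) {
                here = ds.make();
            } else if (up && left) {
                here = std::min(up, left);
                ds.unite(up, left);
            } else {
                here = up ? up : left;
            }
        }

    // Pass 2: map each provisional label's root to a compact final ID.
    std::vector<int> finalId(ds.parent.size(), 0);
    numRegions = 0;
    for (int& l : lab) {
        if (!l) continue;
        int root = ds.find(l);
        if (!finalId[root]) finalId[root] = ++numRegions;
        l = finalId[root];
    }
    return lab;
}
```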


A good strategy to use when getting started is to focus on the region that is larger than a certain size, is most central to the image, and does not touch the image boundary. The region size, region centroid, and region axis-aligned bounding box are all you need to make this comparison. Those statistics are easy to compute from a region map, and they are provided by the built-in OpenCV connectedComponentsWithStats function.
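Selecting the central region from those statistics can be as simple as the sketch below. The RegionStats struct is a hypothetical stand-in for one row of the stats and centroids outputs of connectedComponentsWithStats, and treating label 0 as background follows that function's convention.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical per-region record: axis-aligned bounding box, pixel area,
// and centroid, i.e. what connectedComponentsWithStats reports per label.
struct RegionStats {
    int left, top, width, height, area;
    double cx, cy;
};

// Returns the index of the region whose centroid is closest to the image
// center, among regions with at least minArea pixels whose bounding box does
// not touch the image border; -1 if none qualifies. Index 0 is background.
int pickCentralRegion(const std::vector<RegionStats>& stats, int imgW, int imgH,
                      int minArea) {
    assert(imgW > 0 && imgH > 0);
    int best = -1;
    double bestDist = std::numeric_limits<double>::infinity();
    for (std::size_t i = 1; i < stats.size(); ++i) {
        const RegionStats& s = stats[i];
        if (s.area < minArea) continue;
        bool touches = s.left <= 0 || s.top <= 0 ||
                       s.left + s.width >= imgW || s.top + s.height >= imgH;
        if (touches) continue;
        double d = std::hypot(s.cx - imgW / 2.0, s.cy - imgH / 2.0);
        if (d < bestDist) {
            bestDist = d;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```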

Required images: Include 2-3 examples of region maps, ideally with each region shown in a different color. Use the same images as for the prior tasks.

4. Compute features for each major region

Write a function that computes a set of features for a specified region, given a region map and a region ID. Start by calculating the axis of least central moment and the oriented bounding box. Display these overlaid on the objects in your output.
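The axis of least central moment follows from the second-order central moments: with mu20, mu02, and mu11 computed about the centroid, theta = 0.5 * atan2(2 * mu11, mu20 - mu02). Projecting every region pixel onto that axis and its perpendicular gives the oriented bounding box. A from-scratch sketch with hypothetical names, working directly on a region map (cv::moments computes the same raw and central moments if you use OpenCV):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical result type for one region.
struct RegionGeometry {
    double area = 0, cx = 0, cy = 0;
    double mu20 = 0, mu02 = 0, mu11 = 0;  // central moments divided by area
    double theta = 0;  // axis of least central moment, radians from +x
    // Oriented-box extents from the centroid along e1 = (cos, sin) of theta
    // and along the perpendicular e2 = (-sin, cos), measured at pixel centers.
    double minE1 = 0, maxE1 = 0, minE2 = 0, maxE2 = 0;
};

RegionGeometry regionGeometry(const std::vector<int>& labels, int w, int h, int id) {
    assert(labels.size() == static_cast<std::size_t>(w) * h);
    RegionGeometry g;
    // Pass 1: area and centroid (zeroth- and first-order moments).
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            if (labels[y * w + x] == id) { g.area += 1; g.cx += x; g.cy += y; }
    if (g.area == 0) return g;
    g.cx /= g.area;
    g.cy /= g.area;

    // Pass 2: second-order central moments.
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            if (labels[y * w + x] == id) {
                double dx = x - g.cx, dy = y - g.cy;
                g.mu20 += dx * dx; g.mu02 += dy * dy; g.mu11 += dx * dy;
            }
    g.mu20 /= g.area; g.mu02 /= g.area; g.mu11 /= g.area;
    g.theta = 0.5 * std::atan2(2.0 * g.mu11, g.mu20 - g.mu02);
    double c = std::cos(g.theta), s = std::sin(g.theta);

    // Pass 3: project each pixel onto e1 and e2 to find the box extents.
    g.minE1 = g.minE2 = std::numeric_limits<double>::max();
    g.maxE1 = g.maxE2 = std::numeric_limits<double>::lowest();
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            if (labels[y * w + x] == id) {
                double dx = x - g.cx, dy = y - g.cy;
                double e1 = dx * c + dy * s, e2 = -dx * s + dy * c;
                g.minE1 = std::min(g.minE1, e1); g.maxE1 = std::max(g.maxE1, e1);
                g.minE2 = std::min(g.minE2, e2); g.maxE2 = std::max(g.maxE2, e2);
            }
    return g;
}
```

To draw the box, the four corners are the centroid plus minE1 or maxE1 along e1 and minE2 or maxE2 along e2. Because image y grows downward, a positive theta appears as a clockwise rotation on screen, and because the extents are measured between pixel centers, add one pixel to each side if you want the box to enclose whole pixels.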

Important: for this assignment, the purpose is to analyze regions, not boundaries. Use region-based analysis, not boundary analysis, for generating features.

Percent filled and the oriented bounding box height/width ratio are good features to implement first. Any features you use should be translation, scale, and rotation invariant, such as moments around the central axis of rotation. Give your system the ability to display at least one feature in real time on the video output. Then you can easily test whether the feature is translation, scale, and rotation invariant by moving the object around and watching the feature value. Start with just 2-3 features and add more later. There is an OpenCV function that will compute a set of moments. If you use it, explain in your report what moments it is calculating and which ones you are using as features. Whatever features you choose to use, explain what they are in your report and show the feature vector for the images included in the report for this task.
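A sketch of a small feature vector built from the moments and oriented-box sides: percent filled, the oriented-box height/width ratio, the ratio of the second-moment eigenvalues, and the first Hu invariant (the value cv::HuMoments reports first). All four are intended to be translation, scale, and rotation invariant; the function name and this particular selection are assumptions, not a required set.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical feature extractor. Inputs: the region's pixel area, its
// second-order central moments divided by area (mu20, mu02, mu11), and the
// oriented-box side lengths in pixels along the least-moment axis (len1)
// and perpendicular to it (len2).
std::vector<double> regionFeatures(double area, double mu20, double mu02, double mu11,
                                   double len1, double len2) {
    assert(area > 0 && len1 > 0 && len2 > 0);
    // 1. Percent filled: fraction of the oriented box covered by the region.
    double percentFilled = area / (len1 * len2);
    // 2. Oriented box height/width ratio.
    double hwRatio = len2 / len1;
    // 3. Smaller/larger eigenvalue of [mu20 mu11; mu11 mu02]:
    //    1 for a disk, near 0 for a thin bar.
    double mean = 0.5 * (mu20 + mu02);
    double r = std::sqrt(0.25 * (mu20 - mu02) * (mu20 - mu02) + mu11 * mu11);
    double eigRatio = (mean + r) > 0 ? (mean - r) / (mean + r) : 0.0;
    // 4. First Hu invariant eta20 + eta02, with eta_pq = mu_pq / area^2 for
    //    second-order moments (the inputs are already divided by area once).
    double hu1 = (mu20 + mu02) / area;
    return {percentFilled, hwRatio, eigRatio, hu1};
}
```

As a sanity check, a solid 10x3 pixel rectangle (len1 = 10, len2 = 3) gives a percent filled of 1.0 and a height/width ratio of 0.3 regardless of where it sits or how it is rotated.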

Required images: Include 2-3 examples of regions showing the axis of least central moment and the oriented bounding box. Use the same images as for the prior tasks. Include the computed feature vectors for each object.

5. Collect training data

Enable your system to collect feature vectors from objects, attach labels, and store them in an object DB (e.g., a file). In other words, your system needs to have a training mode that enables you to collect the feature vectors of known objects and store them for later use in classifying unknown objects. You may want to implement this as a response to a key press: when the user types an N, for example, have the system prompt the user for a name/label and then store the feature vector for the current object along with its label into a file. This could also be done from labeled still images of the object, such as those from a training set.
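A CSV file is one simple object DB format. The sketch writes one `label,f1,f2,...` line per training example and reads the file back; the Example struct, the CSV layout, and the function names are assumptions, and labels are assumed to contain no commas.

```cpp
#include <cassert>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical in-memory form of one training example.
struct Example {
    std::string label;
    std::vector<double> features;
};

// Appends one example as a CSV line: label,f1,f2,...
void writeExample(std::ostream& out, const Example& ex) {
    assert(ex.label.find(',') == std::string::npos);
    std::ostringstream row;
    row.precision(17);  // enough digits for doubles to round-trip exactly
    row << ex.label;
    for (double f : ex.features) row << ',' << f;
    out << row.str() << '\n';
}

// Reads every line written by writeExample; blank lines are skipped.
std::vector<Example> readDatabase(std::istream& in) {
    std::vector<Example> db;
    std::string line;
    while (std::getline(in, line)) {
        if (line.empty()) continue;
        std::stringstream ss(line);
        Example ex;
        std::getline(ss, ex.label, ',');
        std::string field;
        while (std::getline(ss, field, ',')) ex.features.push_back(std::stod(field));
        db.push_back(ex);
    }
    return db;
}
```

In a key-press handler you might open the DB with `std::ofstream db("objects.csv", std::ios::app)` (objects.csv is a hypothetical file name, and std::ofstream needs `<fstream>`) and pass it to writeExample; at startup, an std::ifstream on the same file can be passed to readDatabase.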

Explain in your report how your training system works.

6. Classify new images


Enable your system to classify a new feature vector using the known objects database and a scaled Euclidean distance metric: for each feature x, compute [ (x_1 - x_2) / stdev_x ], where stdev_x is the standard deviation of that feature across the object DB, then sum the squares of these terms. Feel free to experiment with other distance metrics. Label the unknown object according to the closest matching feature vector in the object DB (nearest-neighbor recognition). Have your system indicate the label of the object on the output video stream. An extension is to detect when an unknown object (something not in the object DB) is in the video stream or provided as a single image.
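A sketch of scaled-Euclidean nearest-neighbor matching. Standard deviations are computed per feature over the whole DB (population form); the unknown-object threshold is an assumed, hand-tuned parameter for the optional extension, and all names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <string>
#include <vector>

struct Example {
    std::string label;
    std::vector<double> features;
};

// Per-feature standard deviation across the DB.
std::vector<double> featureStdDevs(const std::vector<Example>& db) {
    assert(!db.empty());
    std::size_t n = db[0].features.size();
    std::vector<double> mean(n, 0.0), sd(n, 0.0);
    for (const auto& ex : db) {
        assert(ex.features.size() == n);
        for (std::size_t i = 0; i < n; ++i) mean[i] += ex.features[i];
    }
    for (double& m : mean) m /= db.size();
    for (const auto& ex : db)
        for (std::size_t i = 0; i < n; ++i) {
            double d = ex.features[i] - mean[i];
            sd[i] += d * d;
        }
    for (double& s : sd) s = std::sqrt(s / db.size());
    return sd;
}

// Scaled Euclidean distance: sum over features of ((a_i - b_i) / sd_i)^2.
// Features with zero spread are skipped to avoid dividing by zero.
double scaledDistance(const std::vector<double>& a, const std::vector<double>& b,
                      const std::vector<double>& sd) {
    assert(a.size() == b.size() && a.size() == sd.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        if (sd[i] <= 0.0) continue;
        double z = (a[i] - b[i]) / sd[i];
        sum += z * z;
    }
    return sum;
}

// Nearest-neighbor label; "unknown" if the best distance exceeds the
// (assumed) unknownThreshold.
std::string classifyNN(const std::vector<Example>& db, const std::vector<double>& x,
                       const std::vector<double>& sd,
                       double unknownThreshold = std::numeric_limits<double>::infinity()) {
    double best = std::numeric_limits<double>::infinity();
    std::string label = "unknown";
    for (const auto& ex : db) {
        double d = scaledDistance(x, ex.features, sd);
        if (d < best) {
            best = d;
            label = ex.label;
        }
    }
    return best <= unknownThreshold ? label : "unknown";
}
```

Skipping the square root does not change which neighbor is nearest, but it does mean an unknown-object threshold is compared against the sum of squared scaled differences.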

Required images: Include a result image for each category of object in your report with the assigned label clearly shown.

7. Evaluate the performance of your system

Evaluate the performance of your system on at least 3 different images of each object in different positions and orientations. Build a 5x5 confusion matrix of the results showing true labels versus classified labels. Include the confusion matrix in your report. Hint: this is easier if you add hooks to your code that let you tell the system whether each classification is correct.
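A confusion matrix is a table of counts indexed by (true label, predicted label). The sketch below builds one from pairs you might record through such a correctness hook; the names and the choice to drop predictions outside the class list (such as "unknown") are assumptions. Add "unknown" to the class list if you want those counted.

```cpp
#include <cassert>
#include <iomanip>
#include <iostream>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Rows are true labels and columns are predicted labels, both in the order
// given by `classes`. Predictions not in `classes` are not counted.
std::vector<std::vector<int>> confusionMatrix(
    const std::vector<std::string>& classes,
    const std::vector<std::pair<std::string, std::string>>& results) {
    std::map<std::string, std::size_t> index;
    for (std::size_t i = 0; i < classes.size(); ++i) index[classes[i]] = i;
    std::vector<std::vector<int>> m(classes.size(), std::vector<int>(classes.size(), 0));
    for (const auto& [truth, pred] : results) {
        auto t = index.find(truth);
        auto p = index.find(pred);
        assert(t != index.end());  // every test image needs a known true label
        if (p != index.end()) ++m[t->second][p->second];
    }
    return m;
}

// Prints the matrix with class names as row and column headers.
void printConfusion(std::ostream& out, const std::vector<std::string>& classes,
                    const std::vector<std::vector<int>>& m) {
    out << std::setw(10) << "true\\pred";
    for (const auto& c : classes) out << std::setw(10) << c;
    out << '\n';
    for (std::size_t r = 0; r < classes.size(); ++r) {
        out << std::setw(10) << classes[r];
        for (int v : m[r]) out << std::setw(10) << v;
        out << '\n';
    }
}
```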

8. Capture a demo of your system working

Take a video of your system running and classifying objects. If your system works on still images, show the visualizations your system makes. Include a link to the video in your report.

9. Implement a second classification method

Choose from the following options.

A. Implement a different classifier of your choice, something besides nearest neighbor. For example, implement K-Nearest Neighbor matching with K > 1. Note that KNN matching requires multiple training examples for each object and should not use the voting method.

B. Use a pre-trained deep network (here) (https://northeastern.instructure.com/courses/175350/files/26232326?wrap=1) (https://northeastern.instructure.com/courses/175350/files/26232326/download?download_frd=1) to create an embedding vector for the thresholded object (example code here (https://northeastern.instructure.com/courses/175350/files/26232332?wrap=1) (https://northeastern.instructure.com/courses/175350/files/26232332/download?download_frd=1)) and use nearest-neighbor matching with either sum-squared difference or cosine distance as the distance metric.
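For option A, one non-voting K-nearest-neighbor rule sums, for each class, the distances to its K closest training examples and picks the class with the smallest sum; this is one reasonable reading of the instructions, not necessarily the intended one. For option B, cosine distance and sum-squared difference between embedding vectors are included. All names are hypothetical, and the distance function is passed in so a scaled Euclidean metric could be reused.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <map>
#include <string>
#include <vector>

struct Example {
    std::string label;
    std::vector<double> features;
};

// Option A: for each class, sum the distances to its K closest examples and
// return the class with the smallest sum (no majority voting).
template <typename Dist>
std::string classifyKNNSum(const std::vector<Example>& db, const std::vector<double>& x,
                           int k, Dist dist) {
    assert(k >= 1);
    std::map<std::string, std::vector<double>> perClass;
    for (const auto& ex : db) perClass[ex.label].push_back(dist(x, ex.features));

    std::string best = "unknown";
    double bestSum = std::numeric_limits<double>::infinity();
    for (auto& [label, ds] : perClass) {
        if (static_cast<int>(ds.size()) < k) continue;  // too few examples: skip class
        std::partial_sort(ds.begin(), ds.begin() + k, ds.end());
        double sum = 0.0;
        for (int i = 0; i < k; ++i) sum += ds[i];
        if (sum < bestSum) {
            bestSum = sum;
            best = label;
        }
    }
    return best;
}

// Option B: cosine distance 1 - (a.b) / (|a||b|) between embedding vectors.
double cosineDistance(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    double dot = 0, na = 0, nb = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += a[i] * b[i];
        na += a[i] * a[i];
        nb += b[i] * b[i];
    }
    if (na == 0 || nb == 0) return 1.0;  // undefined for zero vectors; treat as unrelated
    return 1.0 - dot / (std::sqrt(na) * std::sqrt(nb));
}

// Option B alternative: sum-squared difference.
double ssd(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    double s = 0;
    for (std::size_t i = 0; i < a.size(); ++i) s += (a[i] - b[i]) * (a[i] - b[i]);
    return s;
}
```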

Explain your choice and how you implemented it. Also, compare the performance with your baseline system and include the comparison in your report.

Extensions

Write a better GUI to show your system in action and to manage the items in your DB.


Write more than one (or two if working in pairs) of the stages of the system from scratch.

Add more than the required five objects to the DB so your system can recognize more objects.

Enable your system to learn new objects automatically by first detecting whether an object is known or unknown, and then collecting statistics for it if the object is unknown. Demonstrate how you can quickly develop a DB using this feature.

Experiment with more classifiers and/or distance metrics for comparing feature vectors.

Implement both options for the last task.

Explore some of the object recognition tools in OpenCV.

Report

When you are done with your project, write a short report that demonstrates the functionality of each task. You can write your report in the application of your choice, but you need to submit it as a PDF along with your code. Your report should have the following structure. Please do not include code in your report.

1. A short description of the overall project in your own words. (200 words or less)

2. Any required images along with a short description of the meaning of the image.

3. A description and example images of any extensions.

4. A short reflection on what you learned.

5. Acknowledgement of any materials or people you consulted for the assignment.

Submission

Submit your code and report to Gradescope (https://www.gradescope.com). When you are ready to submit, upload your code, report, and a readme file. The readme file should contain the following information.

Your name and any other group members, if any.

Links/URLs to any videos you created and want to submit as part of your report.

What operating system and IDE you used to run and compile your code.

Instructions for running your executables.

Instructions for testing any extensions you completed.

Whether you are using any time travel days and how many.

For project 3, submit your .cpp and .h (.hpp) files, PDF report, and readme.txt (readme.md). Note: if you find any errors or need to update your code, you can resubmit as many times as you wish up until the deadline.


As noted in the syllabus, projects submitted by the deadline can receive full credit for the base project and extensions (max 30/30). Projects submitted up to a week after the deadline can receive full credit for the base project, but not extensions (max 26/30). You also have eight time travel days you can use during the semester to adjust any deadline, using up to three days on any one assignment (no fractional days). If you want to use your time travel days, indicate that in your readme file. If you need to make use of the "stuff happens" clause of the syllabus, contact the instructor as soon as possible to make alternative arrangements.

Receiving grades and feedback

After your project has been graded, you can find your grade and feedback on Gradescope. Pay attention to the feedback, because it will probably help you do better on your next assignment.

请加QQ:99515681 邮箱:99515681@qq.com WX:codinghelp

- WhatsApp拉群营销工具 海外营销高手的神奇法宝 引爆你在国际市场的激情

- 威尔特(广州)流体设备有限公司企业文化分析

- Instagram引流软件,Ins一键自动增粉,打破爆粉秘籍!

- 专业人士的疑问 WhatsApp拉群营销工具是否在海外业务中展现出超凡的效果

- Instagram引流神器 - ins自动登录/ig采集指定地区/ins群发软件

- 跨国战略部署:全球app云筛是制定全球营销计划的得力助手

- 市场巨星 博主开箱 WhatsApp拉群营销工具是我业务成功的不二之选

- 从“稳中求进”的发展新战略,看碧桂园服务的长期主义

- CE-Channel: Choose Us for Easy Access to Global Partnerships, Opening the Doors to Overseas Markets

- 数字产品交易平台的革新力量

- 电报群发软件推荐,Telegram自动采集群发工具/TG协议号购买

- 风气扬帆|第九届上海空气新风展报名通道开启,邀您共赴空净行业盛会!

- 精细化数据海外营销:zalo代筛料子专业团队为您打造一手数据精品

- 数据摆在面前 WhatsApp拉群营销工具助我在全球范围内建立了稳健的品牌形象

- COMP 2049 代做代写 c++,java 编程

- WhatsApp营销软件,ws拉群业务/ws协议号/ws美国号/ws业务咨询大轩

- 独创心法 引爆关注 WhatsApp拉群营销工具为你的消息赋予别样创意 激发用户兴趣

- Ins群发脚本营销软件,Instagram一键群发工具,让你打造营销新格局!

- 成年人高度近视矫正方式如何选?北京爱尔新力眼科赵可浩院长这样说!

- InBody发布2024全球人体成分分析报告

- CIT 594代做、代写Python设计编程

- 代写ITP4905 Object Oriented Programming

- Instagram群发筛选软件,Ins群发注册工具,助你轻松营销!

- 群发云控怎样有利于海外营销

- instagram2024年最强群发营销软件来袭!ins自动推广引流利器

- Ins引流工具,Instagram营销软件,助你实现市场吸粉领先!

- Instagram群发代发工具,Ins一键群发工具,助你轻松营销!

- Instagram群发筛选营销软件,Ins群发注册工具,一键助你轻松推广!

- Ins拉群软件,Instagram引流助手,让你的营销如虎添翼!

- 解决广告费用问题 WhatsApp拉群工具助您实现精打细算广告成本

推荐

-

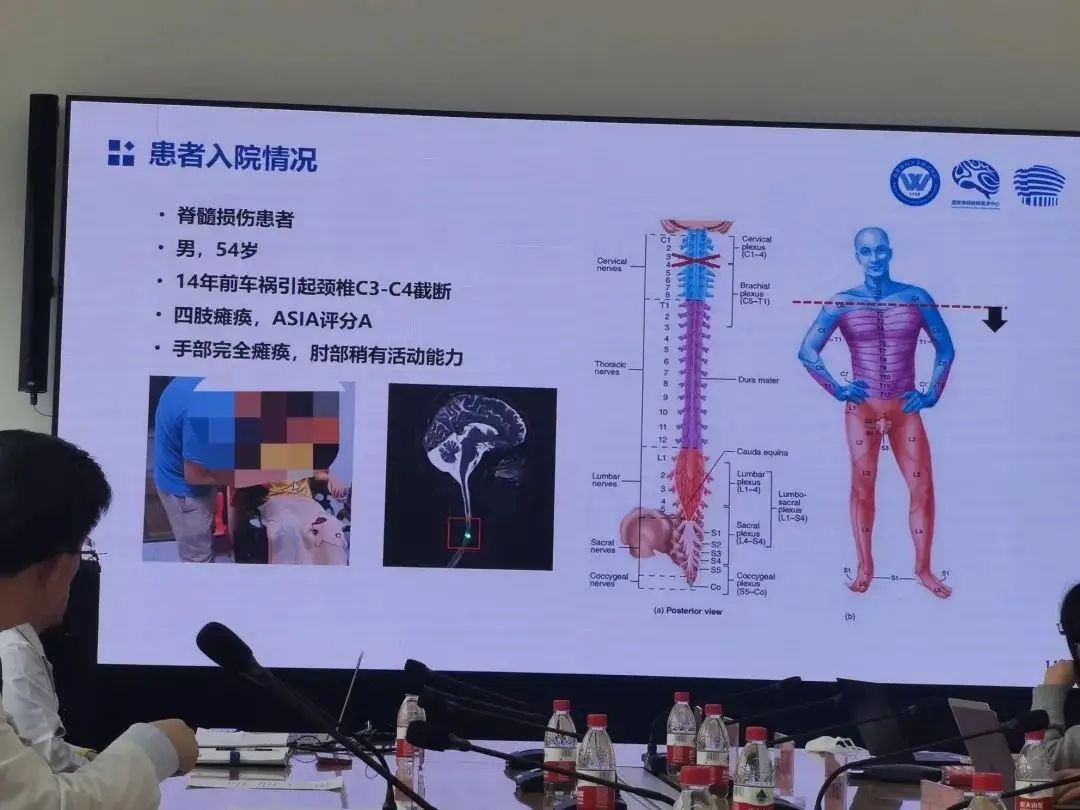

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

-

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

-

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

-

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技

-

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

-

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

-

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

-

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

-

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

-

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技