
COMP52715 Deep Learning for Computer Vision & Robotics (Epiphany Term, 2023-24)

Summative Coursework - 3D PacMan

Coursework Credit - 15 Credits

Estimated Hours of Work - 48 Hours

Submission Method - via Ultra

Release On: February 16 2024 (2pm UK Time)

Due On: March 15 2024 (2pm UK Time)

– All rights reserved. Do NOT Distribute. –

Compiled on November 16, 2023 by Dr. Jingjing Deng

1 Coursework Specification

1. This coursework constitutes 90% of your final mark for this module and comprises two mandatory tasks: Python programming and report writing. You must upload your work to Ultra before the deadline specified on the cover page.

2. The other 10% will be assessed separately based on seminar participation. There are 3 seminar sessions in total, and marks are awarded as follows: (A) participating in no sessions = 0%, (B) participating in 1 session = 2%, (C) participating in 2 sessions = 5%, (D) participating in all sessions = 10%.

3. This coursework is to be completed by students working individually. You should NOT ask for help from your peers, lecturer, or lab tutors regarding the coursework. You will be assessed on your code and report submissions. You must comply with the University rules regarding plagiarism and collusion. Using external code without proper referencing is also considered a breach of academic integrity.

4. Code Submission: The code must be written in Jupyter Notebook with appropriate comments. For constructing deep neural network models, use the PyTorch¹ library only. Zip the Jupyter Notebook source files (*.ipynb), your dataset (if you created a new one), pretrained models (*.pth), and a README.txt (code instructions) into one single archive. Do NOT include the original “PacMan Helper.py”, “PacMan Helper Demo.ipynb”, “PacMan Skeleton.ipynb”, “TrainingImages.zip”, “cloudPositions.npy” and “cloudColors.npy” files. Submit a single Zip file to the “GradeScope - Code” entry on Ultra.

5. Report Submission: The report must NOT exceed 5 pages (including figures, tables, references and supplementary materials) in a single-column format. The minimum font size is 11pt (use Arial, Calibri, or Times New Roman only). Submit a single PDF file to the “GradeScope - Report” entry on Ultra.

6. Academic Misconduct is a major offence which will be dealt with in accordance with the University’s General Regulation IV – Discipline. Please ensure you have read and understood the University’s regulations on plagiarism and other assessment irregularities as noted in the Learning and Teaching Handbook: 6.2.4: Academic Misconduct².

Figure 1: The mysterious PhD Lab.

1 https://pytorch.org/

2 https://durhamuniversity.sharepoint.com/teams/LTH/SitePages/6.2.4.aspx


2 Task Description (90% in total)

2.1 Task 1 - Python Programming (40% subtotal)

In this coursework, you are given a set of 3D point-clouds with appearance features (i.e. RGB values). These point-clouds were collected using a Kinect system in a mysterious PhD Lab (see Figure 1). Several virtual objects are also positioned among those point-clouds. Your task is to write a Python program that can automatically detect those objects in an image and use them as anchors to navigate through the 3D scene and collect the objects. If you land close enough to an object, it will be automatically captured and removed from the scene. A set of example images that contain those virtual objects is provided. These example images are used to train a classifier (basic solution) and an object detector (advanced solution) using deep learning approaches in order to locate the targets. You are required to attempt both the basic and advanced solutions. “PacMan Helper.py” provides some basic functions to help you complete the task. “PacMan Helper Demo.ipynb” demonstrates how to use these functions to obtain a 2D image by projecting the 3D point-clouds onto the camera image-plane, how to re-position and rotate the camera, etc. All the code and data are available on Ultra. You are encouraged to read the given source code, particularly “PacMan Skeleton.ipynb”.
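To make the idea of projecting 3D points onto a camera image-plane concrete, the following is a minimal pinhole-camera sketch. It is not the implementation in “PacMan Helper.py”: the function names, the single yaw rotation, the focal length in pixels, and the axis convention (z forward, y down the image) are all assumptions made for illustration.

```python
import math


def rotate_y(point, angle_rad):
    """Rotate a 3D point about the vertical (y) axis by angle_rad."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)


def project_point(point, cam_pos, yaw_rad, focal_px, width, height):
    """Project a world point to pixel coordinates (u, v).

    Hypothetical pinhole model: the camera sits at cam_pos, is turned by
    yaw_rad about the y axis, and looks along its own +z axis.
    Returns None if the point is behind the camera or outside the image.
    """
    # Express the point relative to the camera position.
    rel = tuple(p - c for p, c in zip(point, cam_pos))
    # Undo the camera yaw so the point is in the camera frame.
    x, y, z = rotate_y(rel, -yaw_rad)
    if z <= 0:
        return None  # behind the camera
    # Perspective division, then shift the origin to the image centre.
    u = focal_px * x / z + width / 2
    v = focal_px * y / z + height / 2
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None
```

Under these assumptions, a point straight ahead of the camera lands at the image centre, and points with non-positive depth are discarded before the division.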

Detection Solution using Basic Binary Classifier (10%). Implement a deep neural network model that can classify an image patch into two categories: target object and background. You can use the given images to train your neural network. It can then be applied in a sliding-window fashion to detect the target object in a given image.
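The sliding-window idea can be sketched independently of the network itself. In the sketch below, `score_patch` is a hypothetical stand-in for a trained binary classifier that returns the probability of "target object" for a patch; the window size, stride, threshold, and the nested-list image format are illustrative assumptions, not values from the coursework.

```python
def sliding_windows(img_w, img_h, win, stride):
    """Yield (left, top, right, bottom) boxes that tile the image."""
    for top in range(0, img_h - win + 1, stride):
        for left in range(0, img_w - win + 1, stride):
            yield (left, top, left + win, top + win)


def detect(image, score_patch, win=4, stride=2, threshold=0.5):
    """Score every window and keep those at or above the threshold.

    image is a list of rows (a 2D grid of pixel values); score_patch is a
    placeholder for the classifier and must return a float in [0, 1].
    """
    height, width = len(image), len(image[0])
    hits = []
    for box in sliding_windows(width, height, win, stride):
        left, top, right, bottom = box
        # Crop the patch covered by this window.
        patch = [row[left:right] for row in image[top:bottom]]
        score = score_patch(patch)
        if score >= threshold:
            hits.append((box, score))
    return hits


def mean_intensity(patch):
    """Toy scorer used only to exercise the loop: mean pixel value."""
    values = [v for row in patch for v in row]
    return sum(values) / len(values)
```

With a real model, `score_patch` would convert the patch to a tensor and run a forward pass. A smaller stride gives finer localisation at the cost of more classifier evaluations.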

Detection Solution using Advanced Object Detector (10%). Implement a deep neural network model that can detect the target object in an image. You may manually or automatically create your own dataset for training the detector. Given an image, the detector will predict bounding boxes that contain the object.
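Detectors that predict bounding boxes commonly emit several overlapping boxes for one object, so a post-processing step such as non-maximum suppression (NMS) is often applied. The coursework does not prescribe this step; the sketch below is a generic, pure-Python illustration in which boxes are assumed to be `(x1, y1, x2, y2)` tuples and the IoU threshold is an arbitrary choice.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Greedy non-maximum suppression over [(box, score), ...].

    Boxes are visited from highest to lowest score; a box is kept only
    if it overlaps every already-kept box by less than iou_threshold.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept
```

The same `iou` function can also be used to compare predicted boxes against ground-truth boxes when evaluating a detector.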

Navigation and Collection Task Completion (10%). There are 11 target objects in the scene. Use the trained models to perform scene navigation and object collection. If you land close enough to the object it will be automatically captured and removed from the scene. You may compare the performance of both models.
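One simple way to think about the collection order is a greedy nearest-target strategy. The sketch below assumes that 3D positions for the targets have already been estimated from detections, which is a separate problem not shown here. The function names and the capture radius are hypothetical; the actual capture distance is determined by the provided helper code and is not stated in this document.

```python
import math


def is_captured(position, target, capture_radius=0.5):
    """True if position is within capture_radius of target (assumed radius)."""
    return math.dist(position, target) <= capture_radius


def plan_greedy_route(start, targets):
    """Visit targets by repeatedly moving to the nearest remaining one.

    Returns the visiting order and the total straight-line distance.
    This ignores obstacles and detection errors; it only shows ordering.
    """
    position = start
    remaining = list(targets)
    route, total = [], 0.0
    while remaining:
        nearest = min(remaining, key=lambda t: math.dist(position, t))
        total += math.dist(position, nearest)
        route.append(nearest)
        remaining.remove(nearest)
        position = nearest
    return route, total
```

In practice the scene would be re-observed after each capture, since removing an object changes what the camera sees and earlier position estimates may be inaccurate.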

Visualisation, Coding Style, and Readability (10%). Visualise the data and your experimental results wherever appropriate. The code should be well structured, with sufficient comments on the essential parts to make the implementation of your experiments easy to read and understand. Check the “Google Python Style Guide”³ for guidance.

2.2 Task 2 - Report Writing (50% subtotal)

You will also write a report (maximum five pages) on your work, which you will submit to Ultra alongside your code. The report must contain the following structure:

Introduction and Method (10%). Introduce the task and contextualise the given problem. Include a few references to previously published work in the field to demonstrate an awareness of the relevant research. Describe the model(s) and approaches you used to undertake the task. Any decisions on hyper-parameters must be stated here, including the motivation for your choices where applicable. If your decision is based on experimentation with a number of parameters, then state this.

Result and Discussion (10%). Describe, compare and contrast the results you obtained with your model(s). Any relationships in the data should be outlined here. Only the most important conclusions should be mentioned in the text. By using tables and figures to support the section, you can avoid describing the results in full. Describe the outcome of the experiment and the conclusions that you can draw from these results.

Robot Design (20%). Consider designing an autonomous robot to undertake the given task in a real scene. Discuss the foreseeable challenges and propose your design, including the robot's mechanical configuration, the hardware and algorithms for robot sensing and control, system efficiency, etc. Provide appropriate justifications for your design choices with evidence from existing literature. You may use simulators such as “CoppeliaSim Edu” or “Gazebo” for visualising your design.

3 https://google.github.io/styleguide/pyguide.html


Format, Writing Style, and Presentation (10%). Language usage and report format should be of a professional standard and meet academic writing criteria, with the explanation appropriately divided as per the structure described above. Tables, figures, and references should be included and cited where appropriate. A guide to citation style can be found in the library guide⁴.

3 Learning Outcome

The following materials from lectures and lab practicals are closely relevant to this task:

1. Basic Deep Neural Networks - Image Classification.

2. Generic Visual Perception - Object Detection.

3. Deep Learning for Robotics Sensing and Controlling - Consideration for Robotic System Design.

The following key learning outcomes are assessed:

1. A critical understanding of the contemporary deep machine learning topics presented, and how these are applicable to relevant industrial problems and have future potential for emerging needs in both a research and industrial setting.

2. An advanced knowledge of the principles and practice of analysing relevant robotics and computer vision deep machine learning based algorithms for problem suitability.

3. Written communication, problem solving and analysis, computational thinking, and advanced programming skills.

The rubric and feedback sheet are attached at the end of this document.

4 https://libguides.durham.ac.uk/research_skills/managing_info/plagiarism

请加QQ:99515681 邮箱:99515681@qq.com WX:codinghelp

- 新手故事 他通过WhatsApp拉群营销工具成功吸引了海量客户 业务增长率高达90%

- Instagram私信软件 - ins引流神器/ig接粉软件/ins打粉软件

- 网络安全不可或缺:Telegram协议号注册器为您的通信保驾护航!

- 春日有礼,西部数据和“她”一起开启存储焕新计划

- instagram实时自动引流软件,ins营销群发必备软件

- 远光软件推出基于大模型训练的智能填单助手

- Instagram怎么自动群发私信,ins群发引流教学/测试联系大轩

- Ins高效筛选助手,Instagram群发代发工具,让你的营销更高效!

- Instagram营销群发软件,Ins高效筛选助手,助你快速营销!

- CS 455代做、Java编程语言代写

- 商业腾飞的关键 WhatsApp拉群工具揭示潮流趋势 让你的业务脱颖而出

- 星际商机的异度航程:2024年是否将是跨境电商 Telegram 群发云控冒险家踏上星际商机的异度航程

- 贵州桃花源新型材料有限公司负氧离子全屋整装增添了空间的独特韵味

- WhatsApp美国协议号/ws印尼号/ws全球混合号/ws群发

- 土蜂蜜供应商城:品味自然,健康之选

- Ins高效筛选助手,Instagram群发代发工具,让你的营销更高效!

- Instagram营销软件 - ins定位采集/ig私信博主/ins批量养号

- 社交宝盒,这个神秘而诱人的名字,对我而言,如同开启成功之门的金钥匙。WhatsApp拉群

- 狂欢启动!Fit.Q生酮必备好物,2件5折起!

- 什么群体需要代筛全球app,跨境Telegram群发云控创业者是否涉足了数字异界的奇幻之地

- WhatsApp协议号的用途,ws协议号怎么购买/ws协议号推荐

- 2024广东水展即将开幕 | 聚焦净水行业热点 抢占行业新机遇!

- Instagram引流神器 - ins采集软件/ig采集助手/ins群发助手

- Telegram/TG群发推广秘籍,电报/TG自动采集拉群软件,TG/纸飞机营销加速器

- 外贸探险家:WhatsApp拉群工具,我这个小白的外贸探险之旅

- WhatsApp协议号如何购买,ws协议号批量出售/ws劫持号/ws频道号推荐

- 星际商海的新星探:2024年是否有跨境电商 Telegram 群发云控冒险者成为星际商海的新星探,勘探新商机

- ELEC207代写、matlab设计编程代做

- 近视手术有几类,到底如何选?爱尔英智眼科医院周继红为您解答

- 喜极而泣 WhatsApp拉群营销工具让我的生意一夜之间大翻身

推荐

-

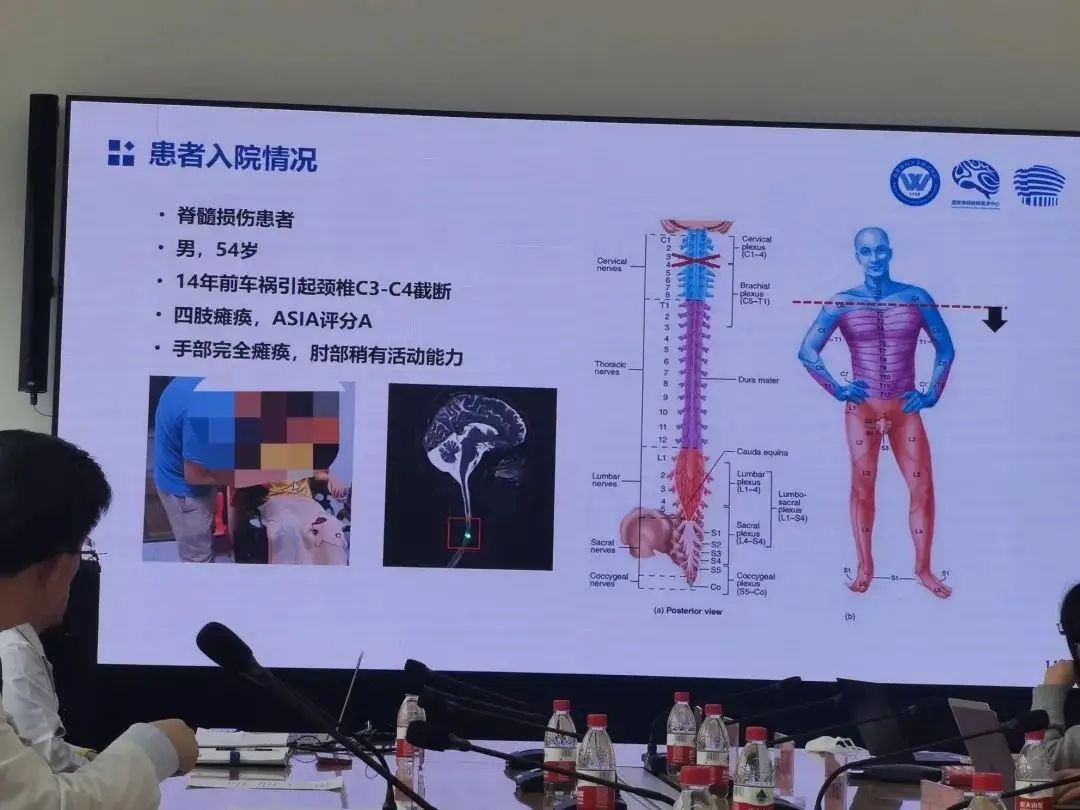

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

老杨第一次再度抓握住一瓶水,他由此产生了新的憧憬

瘫痪十四年后,老杨第一次再度抓握住一瓶水,他

科技

-

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

苹果罕见大降价,华为的压力给到了?

1、苹果官网罕见大降价冲上热搜。原因是苹

科技

-

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

疫情期间 这个品牌实现了疯狂扩张

记得第一次喝瑞幸,还是2017年底去北京出差的

科技

-

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

升级的脉脉,正在以招聘业务铺开商业化版图

长久以来,求职信息流不对称、单向的信息传递

科技

-

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

B站更新决策机构名单:共有 29 名掌权管理者,包括陈睿、徐逸、李旎、樊欣等人

1 月 15 日消息,据界面新闻,B站上周发布内部

科技

-

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技

创意驱动增长,Adobe护城河够深吗?

Adobe通过其Creative Cloud订阅捆绑包具有

科技

-

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

丰田章男称未来依然需要内燃机 已经启动电动机新项目

尽管电动车在全球范围内持续崛起,但丰田章男

科技

-

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

智慧驱动 共创未来| 东芝硬盘创新数据存储技术

为期三天的第五届中国(昆明)南亚社会公共安

科技

-

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

如何经营一家好企业,需要具备什么要素特点

我们大多数人刚开始创办一家企业都遇到经营

科技

-

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技

全力打造中国“创业之都”名片,第十届中国创业者大会将在郑州召开

北京创业科创科技中心主办的第十届中国创业

科技